News

XII Round Table of the UNISM Medicine course debates authoritarianism against human rights

2022 Open a Free crypto trading Account on BYBIT in just 5 mins ✅ Start with just 1000 Rupees

Touqeer Art Website Presentation | Developed by The Graphic Power

A few words About The Graphic Power

Every Business is a Business of Interest to Us.

We created a service, a mechanism that provides you with graphic design and web development every hour of every day. We designed the service for everyone; we reject no one. Startup or established business, we will provide our service to you.

Maybe a little too Person of Interest-esque? Well, in that case allow us to tell you a little more: we are young, passionate and driven. As a team, we hope to deliver cool, up-to-date web development, graphic design and content writing services. From web page construction to logo design to profile writing, we deliver enthusiastically, giving your business the start and the face it needs.

Drupal Association blog: Drupal Association At-Large Board Election 2022 – winner announced

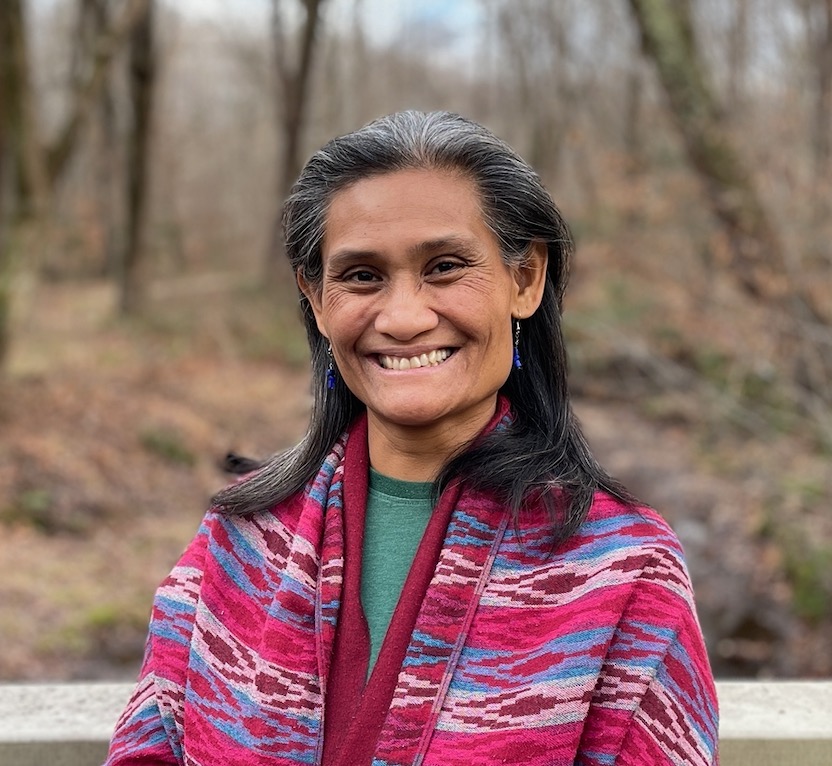

The Drupal Association would like to congratulate our newest At-Large board member:

Nikki Flores

When accepting her position, Nikki shared: “I am so grateful and feel truly honored for the opportunity to serve as your At-Large Board Member, and I pledge to bring all of my skills and talents to the role. For the next two years I’ll plan to work closely with the other board members, the association staff, the community, and all of you to make as many incremental improvements as possible. Part of the reason why I submitted my nomination is to make sure that people know that even without tremendous prior Drupal experience, it is still possible to make a measurable difference. I encourage you to help me and help our community — and the code that helps all of our projects move forward — by getting involved at www.drupal.org/community. You belong to the Drupal community.”

About Nikki

Nikki is currently a Certified ScrumMaster® (CSM), technical project manager, and co-owner at Lullabot, a strategy, design, and development agency supporting redevelopment and new rollouts for Fortune 500 brands, government agencies, higher education, publishers, and enterprise technology companies.

She loves the web and learned to code in 1999. Since 2004, she has worked on 200+ websites implementing Drupal for clients and employers, most recently IBM, New Relic, Green America, the GIST Network for the U.S. Department of State, and Changemakers for Ashoka: Innovators for the Public. She’s been using Drupal since 2008, working with agile methods since 2014, and received the CSM certification in 2020. She likes to think that we all, as makers and creators, are able to use technology to solve problems, connect communities, and build more effective change-making teams.

She is honored to be the recipient of the 2018 and 2019 NTENny Awards and the 2021 Hack for Good award. She keynoted the first annual Drupal Diversity Camp and helped organize the 2022 Non-profit Industry Summit at DrupalCon. She is currently onboarding into the Drupal Community Working Group, where she is reviewing and suggesting changes to an updated Drupal Code of Conduct. She also acts as a Pantheon Hero. She recently moved to the Midwest with her family, including three cats.

Thank you to all 2022 candidates

On behalf of all the staff and board of the Drupal Association, a heartfelt Drupal Thanks to all of you who stood for election this year. Standing for election is a big commitment to contribution, to the Drupal Association, and to the community, and we are so grateful for all of your voices. Thank you for your willingness to serve, and we hope you’ll consider participating again in 2023!

Detailed Voting Results

788 voters cast their ballots out of a pool of 2617 eligible voters (30%). This is a 50% increase in voter participation over 2021, with 145 additional eligible voters added to the voting pool. The share of eligible voters who cast a ballot rose by roughly 10 percentage points compared with 2021.
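The turnout figures can be checked with a few lines of arithmetic. This minimal Python sketch uses only the numbers quoted in the announcement; it assumes the "50% increase" is measured against the number of 2021 voters, so the 2021 voter count and turnout it computes are derived estimates, not published figures.

```python
VOTERS_2022 = 788
ELIGIBLE_2022 = 2617
NEW_ELIGIBLE = 145  # eligible voters added to the pool since 2021


def turnout_percent(voters: float, eligible: int) -> float:
    """Return the share of the eligible pool that voted, as a percentage."""
    return 100.0 * voters / eligible


turnout_2022 = turnout_percent(VOTERS_2022, ELIGIBLE_2022)

# Derived estimates (assumption: the 50% increase is relative to 2021 voters).
eligible_2021 = ELIGIBLE_2022 - NEW_ELIGIBLE
voters_2021_est = VOTERS_2022 / 1.5
turnout_2021_est = turnout_percent(voters_2021_est, eligible_2021)
points_gained = turnout_2022 - turnout_2021_est

print(f"2022 turnout: {turnout_2022:.1f}%")  # 30.1%
print(f"Implied 2021 pool: {eligible_2021}, voters ~{voters_2021_est:.0f}")
print(f"Estimated 2021 turnout: {turnout_2021_est:.1f}%")
print(f"Change: {points_gained:.1f} percentage points")
```

Under that assumption the estimated 2021 turnout is a little over 21%, so the gain is just under 9 percentage points, close to the rounded figure in the announcement.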

Under Approval Voting, each voter can approve one or more candidates. The final vote totals were as follows:

| Candidate | Votes |
| --- | --- |
| Nikki Flores | 372 |
| Adam Bergstein | 365 |
| Mark Dorison | 241 |
| John Doyle | 171 |
| Esaya Jokonya | 153 |
| Bhavin Joshi | 140 |

These voting results were unanimously ratified by the Drupal Association Board.
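Because approval votes are not exclusive, the candidate totals add up to more than the 788 ballots cast. A minimal Python sketch of approval counting follows; the toy ballots and the `tally_approval` helper are hypothetical, and the only real data are the published 2022 totals from the table.

```python
from collections import Counter
from typing import Iterable, Set


def tally_approval(ballots: Iterable[Set[str]]) -> Counter:
    """Count approval ballots: each ballot is a set of approved candidates,
    and each approved candidate gets exactly one vote from that ballot."""
    totals: Counter = Counter()
    for ballot in ballots:
        totals.update(ballot)  # iterating a set adds 1 per approved candidate
    return totals


# Hypothetical toy ballots, not the real 2022 ballots.
toy = tally_approval([{"A", "B"}, {"A"}, {"B", "C"}, {"A", "C"}])
print(toy.most_common(1))  # [('A', 3)]

# Published 2022 totals from the table.
published = Counter({
    "Nikki Flores": 372,
    "Adam Bergstein": 365,
    "Mark Dorison": 241,
    "John Doyle": 171,
    "Esaya Jokonya": 153,
    "Bhavin Joshi": 140,
})
winner, votes = published.most_common(1)[0]
approvals_per_ballot = sum(published.values()) / 788
print(winner, votes)  # Nikki Flores 372
print(f"{approvals_per_ballot:.2f} approvals per ballot")  # 1.83
```

The 1442 total approvals across 788 ballots works out to an average of about 1.83 candidates approved per voter.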

Test which reminded me why I don’t really like RSpec

Add listening ports to firewalld with Ansible

Ansible’s community.general.listen_ports_facts module finds all listening TCP and UDP ports on your system. Read more at Enable Sysadmin.

The post Add listening ports to firewalld with Ansible appeared first on Linux.com.
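A minimal Python sketch of the idea behind the article: turn gathered listening ports into the `port/protocol` strings that firewalld accepts, as in `firewall-cmd --permanent --add-port=22/tcp`. It assumes the facts arrive as `tcp_listen` and `udp_listen` lists of dicts with `port` and `address` keys (check the module documentation for the exact fields); the `firewalld_ports` helper and the sample data are hypothetical and not part of Ansible.

```python
from typing import Dict, List

# Addresses treated as loopback-only; such listeners usually need no firewall rule.
LOOPBACK = {"127.0.0.1", "::1"}


def firewalld_ports(facts: Dict[str, List[dict]], skip_loopback: bool = True) -> List[str]:
    """Return sorted, de-duplicated "port/protocol" strings for firewalld.

    `facts` is assumed to look like the tcp_listen/udp_listen data gathered
    by community.general.listen_ports_facts (assumed shape, see lead-in).
    """
    ports = set()
    for key, proto in (("tcp_listen", "tcp"), ("udp_listen", "udp")):
        for entry in facts.get(key, []):
            if skip_loopback and entry.get("address") in LOOPBACK:
                continue
            ports.add((int(entry["port"]), proto))
    return [f"{port}/{proto}" for port, proto in sorted(ports)]


# Hypothetical sample: sshd on IPv4 and IPv6, cupsd on loopback, avahi on UDP.
sample = {
    "tcp_listen": [
        {"address": "0.0.0.0", "port": 22, "name": "sshd"},
        {"address": "::", "port": 22, "name": "sshd"},
        {"address": "127.0.0.1", "port": 631, "name": "cupsd"},
    ],
    "udp_listen": [
        {"address": "0.0.0.0", "port": 5353, "name": "avahi-daemon"},
    ],
}

for spec in firewalld_ports(sample):
    print(f"firewall-cmd --permanent --add-port={spec}")
```

Inside a playbook, each string could instead be passed to the `port` option of the `ansible.posix.firewalld` module together with `permanent: true` and `state: enabled`. Deduplicating first avoids adding the same rule twice when a service listens on both IPv4 and IPv6.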

FreeBSD 12.4-RC1 Available

lightning @ Savannah: GNU lightning 2.1.4 release

GNU lightning 2.1.4 released!

GNU lightning is a library to aid in making portable programs that compile assembly code at run time.

Development:

http://git.savannah.gnu.org/cgit/lightning.git

Download release:

ftp://ftp.gnu.org/gnu/lightning/lightning-2.1.4.tar.gz

The main features of 2.1.4 are the new LoongArch port, currently supporting only 64-bit Linux, and a rewrite of the register live and unknown state logic, which should make code generation faster.

The matrix of built and tested environments is:

- aarch64 Linux
- alpha Linux (QEMU)
- armv7l Linux (QEMU)
- armv7hl Linux (QEMU)
- hppa Linux (32 bit, QEMU)
- i686 Linux, FreeBSD, NetBSD, OpenBSD and Cygwin/MingW
- ia64 Linux
- mips Linux
- powerpc32 AIX
- powerpc64 AIX
- powerpc64le Linux
- riscv Linux
- s390 Linux
- s390x Linux
- sparc Linux
- sparc64 Linux
- x32 Linux
- x86_64 Linux and Cygwin/MingW

Highlights are:

- Faster jit generation.

- New LoongArch port.

- New skip instruction and rework of the align instruction.

- New bswapr_us, bswapr_ui, bswapr_ul byte swap instructions.

- New movzr and movnr conditional move instructions.

- New casr and casi atomic compare and swap instructions.

- Use short unconditional jumps and calls for forward references to labels that are not yet defined.

- Several bug fixes and optimizations.
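The new byte-swap and conditional-move instructions are easiest to understand by their effect on values. The following is a plain Python model, not GNU lightning's C API: the `model_*` function names are hypothetical, `bswapr_ul` is modeled as 64-bit (as on a 64-bit target), and the conditional moves assume that `movzr r0, r1, r2` copies `r1` into `r0` only when `r2` is zero, while `movnr` does so only when `r2` is nonzero.

```python
def bswap(value: int, width_bytes: int) -> int:
    """Reverse the byte order of an unsigned integer `width_bytes` wide."""
    mask = (1 << (8 * width_bytes)) - 1
    raw = (value & mask).to_bytes(width_bytes, "little")
    return int.from_bytes(raw, "big")


def model_bswapr_us(v: int) -> int:
    return bswap(v, 2)  # unsigned short: 16 bits


def model_bswapr_ui(v: int) -> int:
    return bswap(v, 4)  # unsigned int: 32 bits


def model_bswapr_ul(v: int) -> int:
    return bswap(v, 8)  # unsigned long: 64 bits assumed


def model_movzr(r0: int, r1: int, r2: int) -> int:
    """Return the new r0: r1 if r2 == 0, otherwise r0 is unchanged (assumed semantics)."""
    return r1 if r2 == 0 else r0


def model_movnr(r0: int, r1: int, r2: int) -> int:
    """Return the new r0: r1 if r2 != 0, otherwise r0 is unchanged (assumed semantics)."""
    return r1 if r2 != 0 else r0


print(hex(model_bswapr_us(0x1234)))      # 0x3412
print(hex(model_bswapr_ui(0x12345678)))  # 0x78563412
print(model_movzr(7, 42, 0), model_movnr(7, 42, 0))  # 42 7
```

A conditional move lets generated code select between two values without emitting a branch.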

Deanna Davis attends IMARAÏS Beauty on the FL!P App launch party in Los Angeles | @deanna_davis

B-roll footage: Deanna Davis attends Sommer Ray’s IMARAÏS Beauty on the FL!P App launch party held at Casita Hollywood in Los Angeles, California, USA, on November 3, 2022. This video is available in high quality for editorial use only. All media and worldwide use. ©MaximoTV